Investigators are human too, and are prone to the same biases as anybody else.

Investigation reports ending with a conclusion of ‘pilot error’ give the illusion that the cause has been determined. This is, however, not a sufficient explanation for an occurrence. Remember, human error is the starting point, not the end point. Why did that error occur? Continue asking ‘why?’ until you’ve discovered all the factors contributing to that error. It’s only through understanding the ‘why?’ that you can then make effective safety improvements.

When making decisions or judgements, humans use what are called ‘heuristics’, more commonly known as ‘rules of thumb’. These are shortcuts our brains take to process information more efficiently. It’s an amazing feature of our brains, but the downside is that these rules of thumb can lead to biases. Everyone is prone to bias, including investigators, so investigators must be very aware of it; otherwise they can arrive at the wrong conclusions. Below are some of the most common investigator biases.

If an investigation report states ‘the pilot should have…’, ‘the engineer could have…’ or ‘it would have been better to…’, the investigator has used hindsight bias to judge and blame the individual for their actions, rather than trying to understand why those actions made sense at the time, given the information available. Remember, unlike the investigator, the person did not have the advantage of knowing the outcome at the time of their decision.

Image source: Sidney Dekker ‘The Field Guide to Understanding Human Error.’

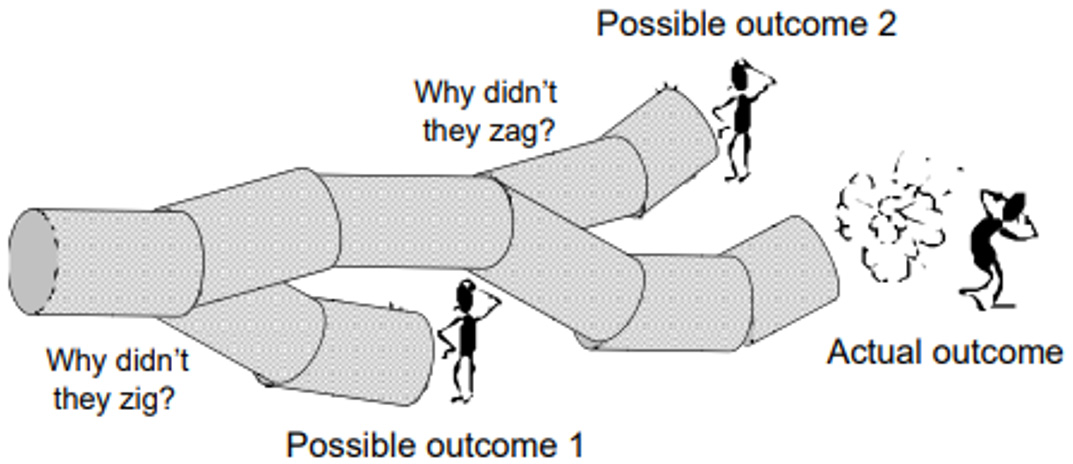

Counterfactuals: Going back through a sequence, you wonder why people missed opportunities to direct events away from the eventual outcome. This, however, does not explain failure.

Image source: Sidney Dekker ‘The Field Guide to Understanding Human Error.’

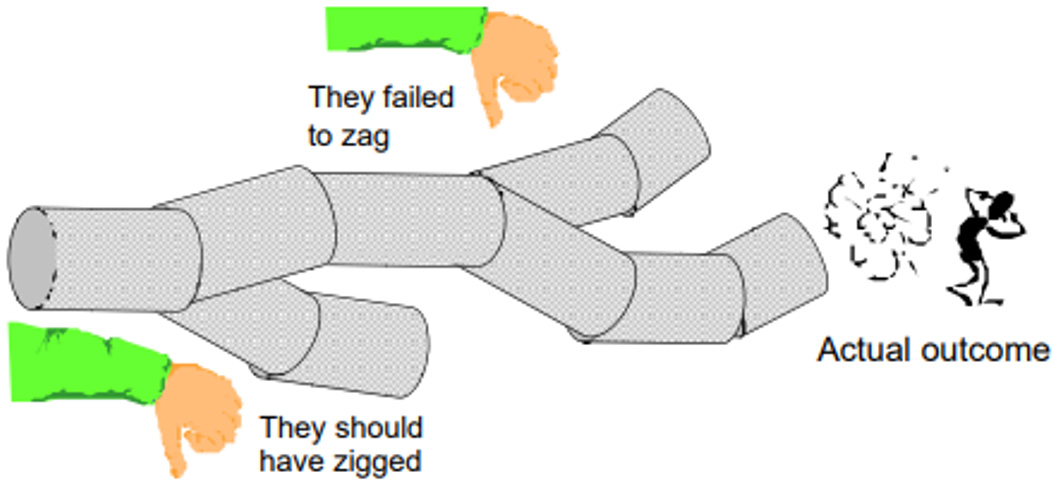

Judgmental: By claiming that people should have done something they didn’t, or failed to do something they should have, you do not explain their actual behaviour.

As investigators, we might tend to focus on the person(s) who just happened to be in the seat at the time: ‘The pilot didn’t do [X], so it was pilot error that caused the accident’. This can lead to the assumption that the pilot (or controller, or engineer) is somehow flawed. But if that person was influenced by organisational or environmental factors, the same error could have been made by another person. This is often referred to in safety investigation as the ‘substitution test’. If another pilot with the same skill and experience were put in that situation, could they have made the same error? If the answer is yes, then it’s a system issue, not an individual error.

If you have any questions about this topic, email humanfactors@caa.govt.nz